Blog #0186: What We Let Machines Do

Source: Photo by Jens Lelie on Unsplash

[ED: A framework for thinking about where we draw the line on AI autonomy, from spreadsheets to self-driving cars. Overall: 3/5 hats.]

The Autonomy Ladder

We assess AI not by what it can do, but by what we permit it to do without supervision. Technical capability and social acceptance don't move in lockstep. The gap between them defines the current debate.

Consider four categories of AI capability, arranged by how much autonomy we grant them:

- analysis
- prediction
- generation
- behaviour

Think of them as rungs on a ladder. Each rung represents a different answer to the question: "At what point must a human intervene?"

Analysis sits at the bottom. Machines have performed autonomous analysis for half a century. No one objects to a spreadsheet calculating a sum or a database running a query. The output requires human interpretation. The machine holds no opinion about what the numbers mean.

Prediction occupies the next rung. A weather model predicts rain. You decide whether to cancel the picnic. A credit model predicts default risk, and a loan officer (in theory) decides whether to approve. The arrangement holds as long as the human retains final authority.

Generation marks contested ground. Using AI to apply a Photoshop filter draws no objection---the tool transforms what a human created. Using AI to generate an image in another artist's style attracts criticism. The technical process is similar in both cases. The argument is about authorship, not algorithms.

Behaviour sits at the top, split into two zones. Digital behaviour---AI agents that send emails, execute trades, manage workflows---attracts cautious acceptance. Misfired emails can be retracted, bad trades reversed. Embodied behaviour is another thing entirely. A vehicle that misjudges a stopping distance does not get a second attempt. A surgical robot that cuts the wrong tissue cannot uncommit the action.

We accept algorithmic trading but resist autonomous vehicles. The dividing line is whether mistakes can be undone.

The Permanence Problem

Generated content has a problem that prediction and analysis don't. It sticks around. It enters archives, becomes training data, gets cited as reference, and accumulates authority it never earned.

Models trained on AI-generated content can amplify the errors baked into it. Legal and ethical frameworks built for human-authored work don't cope well with synthetic content. Who made this? Hard to say. Where did it come from? Harder still.

A Photoshop filter transforms existing material, and you can still point to the original author. A text-to-image generator creates something with no clear original. Authorship dissolves into prompts, training data, and model weights.

The Behaviour Paradox

Look at commercial aviation. Autopilot systems have operated safely for decades. Modern aircraft can execute fully automated landings in visibility too poor for a manual approach. Yet passengers still want a human pilot at the controls for takeoff and landing, including the landings that automation already handles reliably.

Now look at autonomous vehicles. Edge cases proliferate: construction zones, unusual weather, unpredictable pedestrians. Yet some manufacturers treat the problem as nearly solved. Familiarity and institutional trust matter as much as raw technical performance here. Aviation built its safety record over a century of incremental automation and transparent incident investigation. Autonomous vehicles are trying to compress that into a decade, in less controlled environments.

If we stuck with the ladder, we'd place both aviation autopilot and autonomous vehicles on the same "behaviour" rung. That obscures more than it reveals. One is mature, trusted, operating in controlled airspace with professional operators. The other is experimental, contested, operating on public roads with untrained passengers. Same category, different situations.

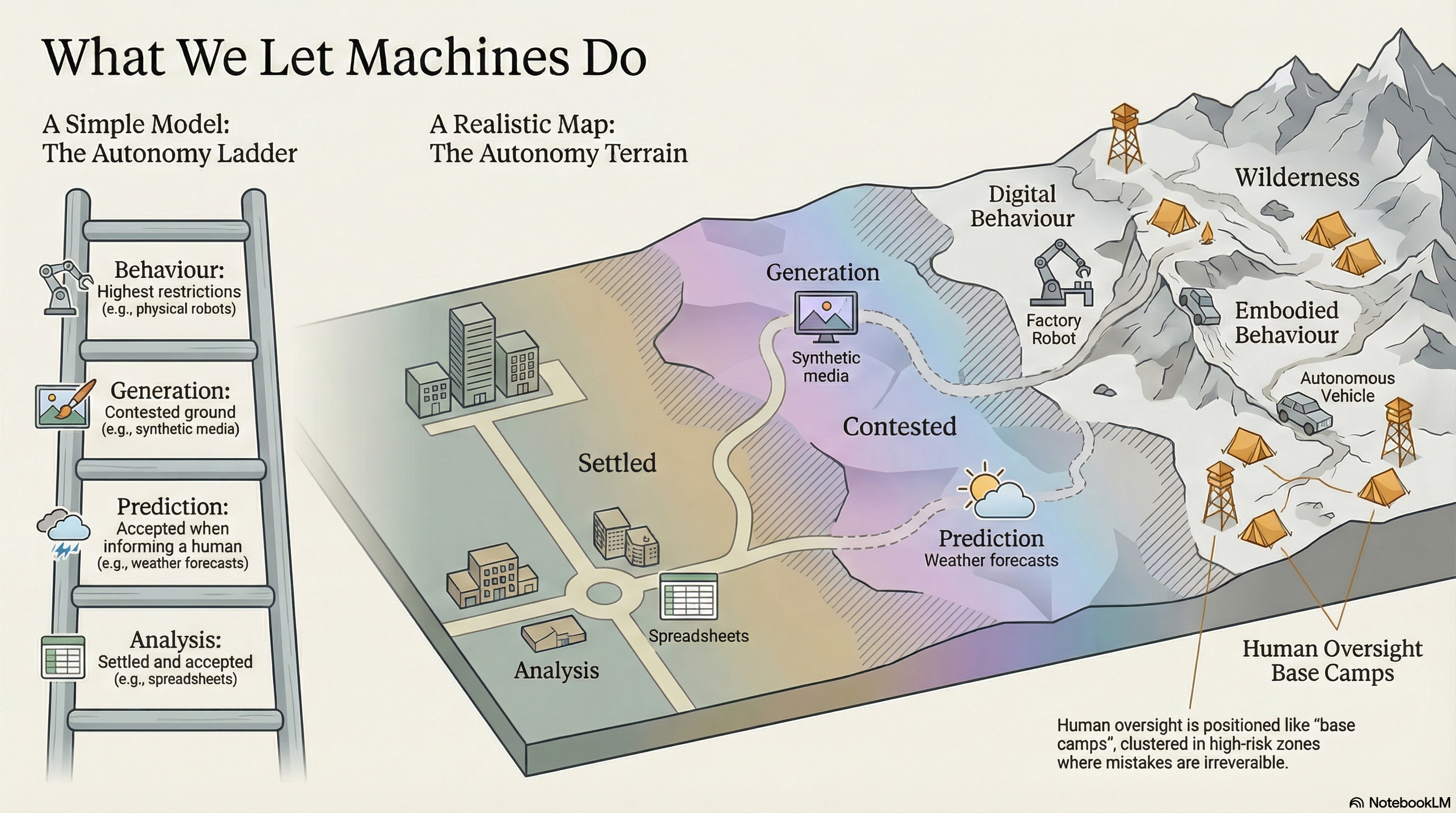

From Ladder to Terrain

The ladder served its purpose. Simple frameworks break when reality fails to fit. What we actually have is terrain:

Source: Diagram courtesy of Google's NotebookLM

Source: Diagram courtesy of Google's NotebookLM

[ED: Crikey, it's not without errors, but NotebookLM's capabilities are really something else.]

Spreadsheets, databases, statistical tools. That's settled ground. Nobody debates whether to let machines calculate. Generation is more contested---AI-assisted editing is becoming routine while synthetic media stays disputed. And then there's the wilderness. Robots in homes. Vehicles on arbitrary roads. AI agents making consequential decisions without oversight.

What the ladder misses is that progress happens unevenly. Factory robots achieved sophisticated autonomy decades before generative AI became useful. Surgical robots operate with high autonomy in narrow contexts while warehouse robots struggle with novelty. The digital/embodied split makes more sense as terrain than as rungs---different conditions, different equipment, different tolerance for risk.

The Hypervisor Layer

One model for managing AI autonomy borrows from computing---the hypervisor. A hypervisor manages virtual machines, allocating resources and stepping in when something goes wrong. Applied to AI, this suggests a layered pattern. Agents operate autonomously within defined boundaries. A coordination layer manages interactions between them. A human layer sits above both, called in when either lower layer hits something it can't handle.
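The layering can be sketched in a few lines of Python. This is only an illustration of the routing idea, not a real system: the `Task` fields `within_agent_bounds` and `coordinator_can_resolve` are hypothetical flags invented for the example, standing in for whatever boundary checks a real deployment would run.

```python
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    AGENT = "agent"              # autonomous within defined boundaries
    COORDINATOR = "coordinator"  # manages interactions between agents
    HUMAN = "human"              # called in when the lower layers are stuck


@dataclass
class Task:
    name: str
    within_agent_bounds: bool      # hypothetical: the task fits the agent's remit
    coordinator_can_resolve: bool  # hypothetical: the coordination layer can settle it


def route(task: Task) -> Layer:
    """Return the lowest layer able to handle the task."""
    if task.within_agent_bounds:
        return Layer.AGENT
    if task.coordinator_can_resolve:
        return Layer.COORDINATOR
    # Neither lower layer can cope, so the task escalates to a person.
    return Layer.HUMAN


routine = Task("send reminder email", True, True)
clash = Task("two agents booked the same room", False, True)
novel = Task("customer threatens legal action", False, False)

for task in (routine, clash, novel):
    print(f"{task.name} -> {route(task).value}")
```

The point of the sketch is the ordering: work stays at the lowest layer that can handle it, and the human layer is reached only by falling through the other two.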

Think of it as base camps across the terrain. Humans position themselves at critical junctions, ready to intervene when automated systems hit conditions they weren't built for. The base camps cluster more densely in the embodied regions, where unrecoverable errors cost the most.

The practical challenge is calibration. Too sensitive, and humans drown in alerts. Too permissive, and failures go unnoticed. The boundary conditions matter more than the architecture.
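A toy calculation makes the calibration trade-off concrete. Assume each automated action gets an anomaly score between 0 and 1, and anything at or above a threshold is sent to a human. The scores and failure labels below are made up purely for illustration.

```python
def calibration_tradeoff(scores, is_failure, threshold):
    """Return (alerts raised, real failures missed) at a given threshold."""
    # Every score at or above the threshold becomes an alert for a human.
    alerts = sum(1 for s in scores if s >= threshold)
    # A miss is a real failure whose score fell below the threshold.
    missed = sum(1 for s, failed in zip(scores, is_failure) if failed and s < threshold)
    return alerts, missed


# Hypothetical data: anomaly scores and whether each case was a real failure.
scores = [0.1, 0.2, 0.35, 0.5, 0.65, 0.8, 0.9]
is_failure = [False, False, True, False, False, True, True]

for threshold in (0.3, 0.7, 0.95):
    alerts, missed = calibration_tradeoff(scores, is_failure, threshold)
    print(f"threshold={threshold}: alerts={alerts}, missed={missed}")
```

On this made-up data, a threshold of 0.3 raises five alerts and misses nothing, 0.7 raises two alerts and misses one failure, and 0.95 raises none and misses all three. Neither end of the range is free, and where the right setting lies depends on how costly a missed failure is.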

The Changing Map

The terrain is not static. Prediction is already bleeding into decision---algorithmic systems that nominally "recommend" often effectively "decide" because humans rubber-stamp without review. The human-in-the-loop becomes a human-in-the-letterbox: present but not participating.

Generation will follow. As synthetic content becomes indistinguishable from human work and the cost of production falls toward zero, practical barriers to autonomous generation erode. Social and legal barriers may persist longer, but they face pressure.

Behaviour presents the hardest terrain. Controlled environments---factories, warehouses, dedicated corridors---will see paths cleared faster than public roads, homes, or hospitals. The stakes of premature trust in embodied systems are measured in injuries and deaths, not inconvenience.

Some territory will stay permanently off-limits. Not a technical limitation---a deliberate choice.

Navigating Forward

Three principles:

Match oversight to terrain. Well-mapped regions need less supervision. Embodied regions need the most of all. Uniform oversight everywhere wastes attention where it is not needed and dilutes it where it is.

Build infrastructure, not just paths. Escalation channels must function reliably---systems that detect anomalies, route decisions to appropriate humans, and ensure those humans have context. In embodied systems, this infrastructure must operate faster than human reaction time, with safety margins that assume escalation will sometimes fail.
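One way to picture "assume escalation will sometimes fail" is an escalation call that always has a safe fallback. The sketch below is a minimal illustration using only the Python standard library. The names `escalate`, `ask_human`, and `safe_default` are hypothetical, and a real embodied system would need hard real-time guarantees that ordinary threads do not provide.

```python
import queue
import threading
import time


def escalate(ask_human, context, timeout_s, safe_default):
    """Ask a human for a decision; fall back to safe_default if none arrives in time."""
    answers = queue.Queue(maxsize=1)

    def worker():
        try:
            answers.put(ask_human(context))
        except Exception:
            pass  # a broken channel is treated the same as no answer

    threading.Thread(target=worker, daemon=True).start()
    try:
        return answers.get(timeout=timeout_s)
    except queue.Empty:
        # No decision in time: take the conservative action instead of waiting.
        return safe_default


def prompt_reply(context):
    """Hypothetical human who answers immediately."""
    return "proceed"


def silent_channel(context):
    """Hypothetical human who answers too late."""
    time.sleep(0.5)
    return "proceed"


context = {"event": "obstacle detected"}  # the context the human would need
print(escalate(prompt_reply, context, timeout_s=0.2, safe_default="stop"))
print(escalate(silent_channel, context, timeout_s=0.05, safe_default="stop"))
```

The design choice worth noticing is that the caller names the safe action up front. The system never waits indefinitely for a person, and a late or missing answer degrades to the conservative behaviour.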

Accept that the map will change. And the map is not the territory [Korzybski]. Any fixed policy about what AI should do autonomously will become obsolete. The goal is not permanent boundaries but institutions capable of redrawing them.

We started with a ladder because ladders are easy to understand. We end with terrain because terrain is what we face.

Regards,

M@

Originally posted on matthewsinclair.com and cross-posted on Medium.
