QFM060: Irresponsible AI Reading List March 2025

Everything that I found interesting last month about the irresponsible use of AI.

Tags: qfm, irresponsible, ai, reading, list, march, 2025

Source: Photo by Brett Jordan on Unsplash

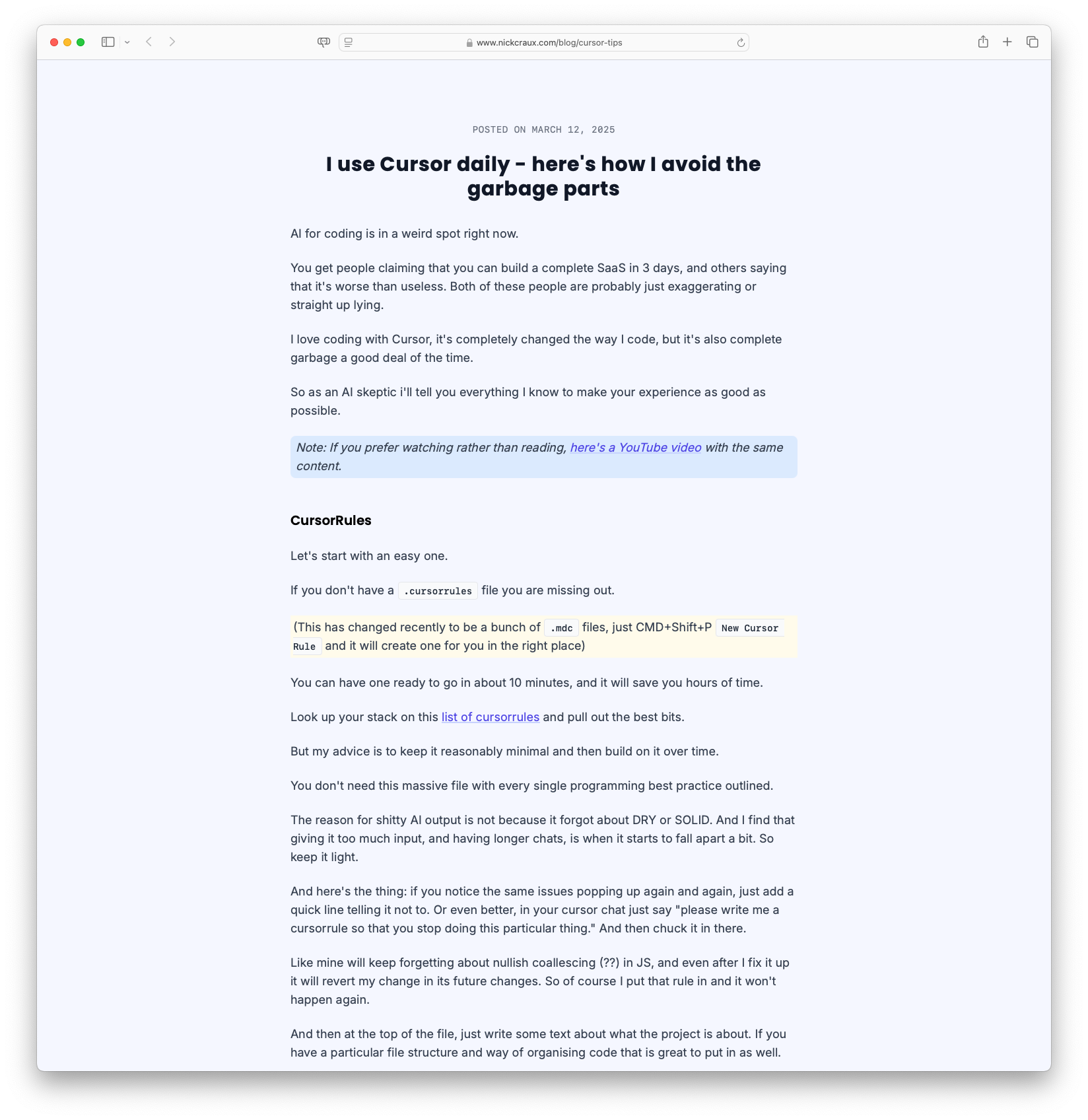

This month’s Irresponsible AI Reading List opens with a practical tension that runs through many AI discussions today: tools that promise to save time often introduce new kinds of cognitive overhead. In I use Cursor daily – here’s how I avoid the garbage parts, Nick Craux documents a pragmatic approach to using AI-powered code assistants. The article recognises Cursor’s capacity to streamline work, while also flagging the ways these tools can produce counterproductive or misleading results. The solution, according to Craux, lies not in abandoning AI altogether, but in minimising reliance and constraining its scope with simple human-enforced rules.

The tension between control and unpredictability recurs in AI Blindspots, which identifies common failure modes when working with large language models in code generation tasks. Here, the focus shifts from the user interface to the internal behaviour of LLMs, highlighting strategic methods for testing and debugging systems that can appear consistent but behave erratically. Both articles suggest that effective use of AI tools depends less on trust and more on structure and boundaries.

That fragility becomes more visible—and more public—in Chinese AI Robot Attacks Crowd at China Festival, a viral video report of a robot incident during a major public event. Regardless of how controlled or exaggerated the footage may be, the event raises valid concerns around real-world deployments of AI-powered systems, particularly in unsupervised or high-stakes contexts. I really hope this is a fake.

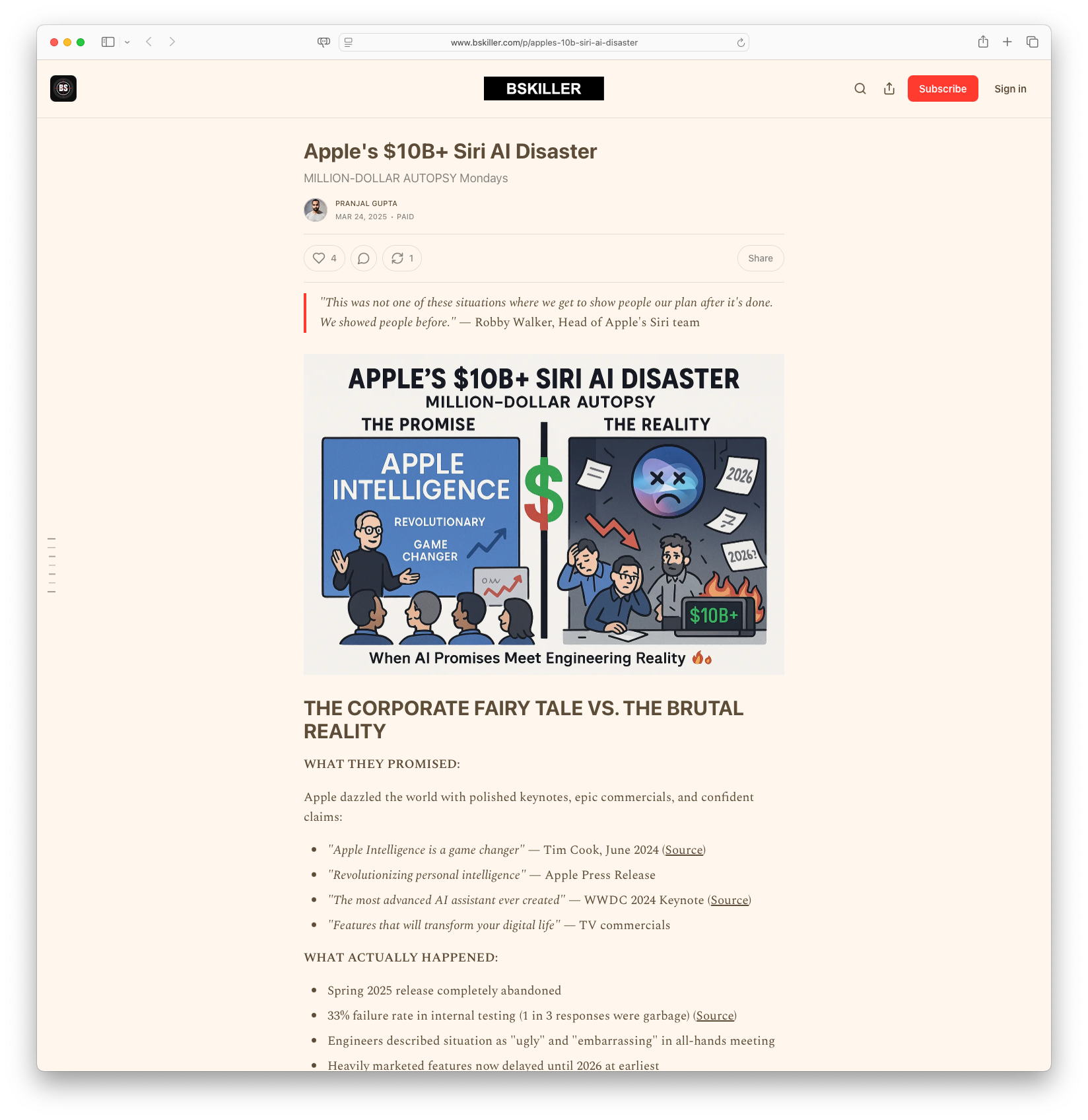

Elsewhere, Apple’s $10B+ Siri AI Disaster examines a longer-term failure in AI development. Despite major investment and internal talent, Apple’s struggles with Siri underscore how institutional complexity, lack of focus, and overpromising can lead even the most well-resourced teams into systemic errors. As with Cursor and LLM debugging, the article suggests that success in AI may depend more on operational clarity than algorithmic sophistication. Here are a few more articles on the same topic, from daringfireball.net and (paywalled) from The Information.

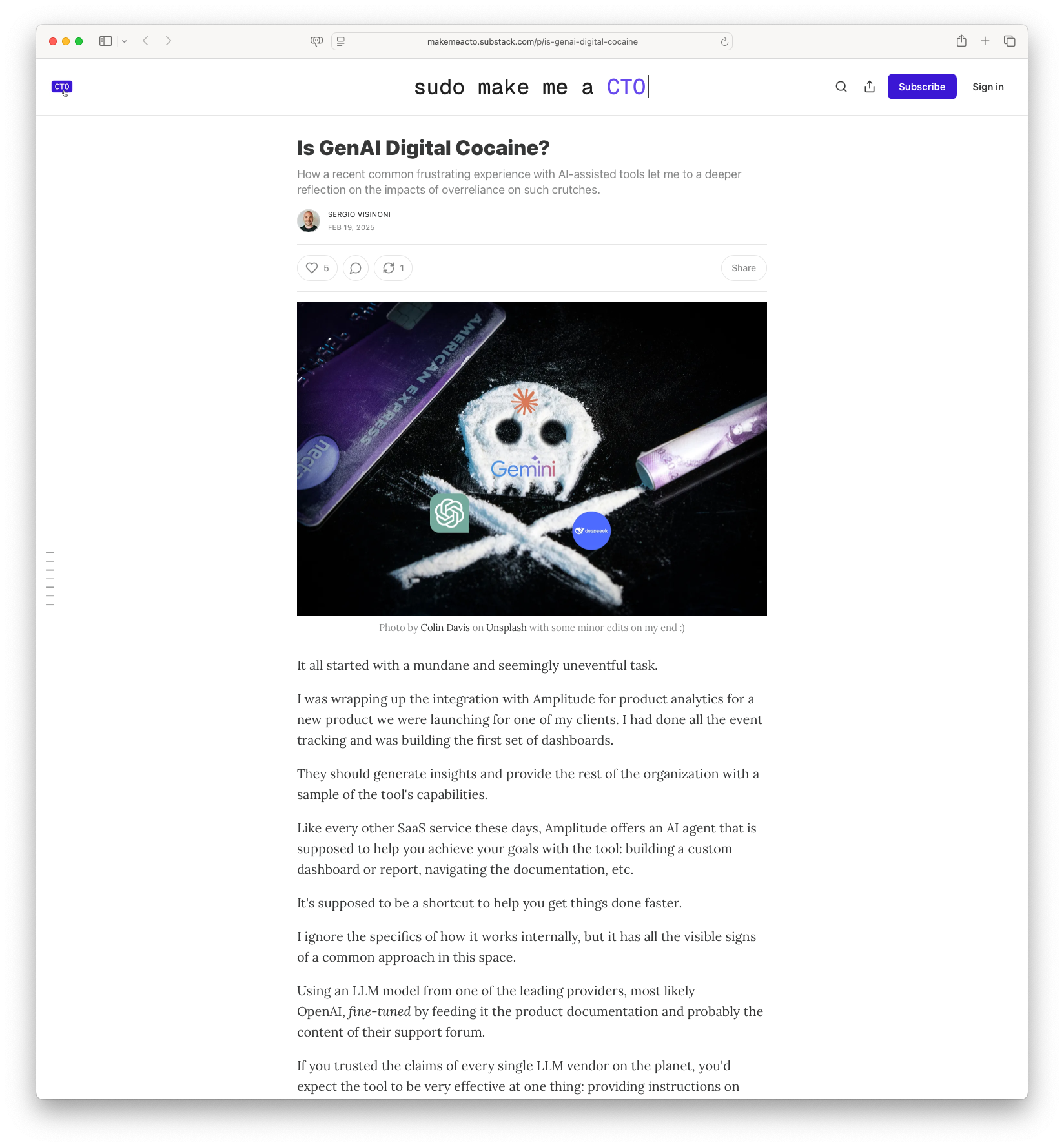

Finally, Is GenAI Digital Cocaine? takes a provocative and psychological angle, reflecting on how dependence on AI for routine problem-solving might degrade user competence over time. While the metaphor may be intentionally click-baity, the core argument (that overuse of generative tools can subtly shift user behaviour and expectations) links back to broader questions about what’s lost when too much is delegated to systems that often appear more intelligent than they are.

Across these pieces, a shared concern emerges: not that AI is dangerous in the abstract, but that its practical application often outpaces our frameworks for supervision, evaluation, and restraint. Whether at the level of a solo developer, a multinational corporation, or a public square, the challenge is the same—learning how to work with systems that offer assistance while demanding new forms of discipline.

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

I use Cursor daily - here’s how I avoid the garbage parts: The article explores the dual nature of using AI tools for coding, with a focus on the Cursor application. Despite being able to drastically improve coding efficiency, the author describes how these AI tools can be problematic, producing ‘garbage’ output at times. The key to a successful experience, according to the author, is to maintain a balance between automation and human oversight, minimizing the input and incorporating customizations through simplified rules to curb common AI errors.

#AI #Coding #Cursor #TechTips #SoftwareDevelopment

Chinese AI Robot Attacks Crowd at China Festival: In a startling incident at a Chinese festival, a video has emerged showing an AI robot attacking the crowd during the Spring Festival Gala. The shocking footage was captured on February 9, and has since gone viral, raising questions about the safety and control measures of AI technologies used in public environments. This event has sparked widespread concern and discussions regarding the integration and regulation of AI in public spaces.

#AI #TechSafety #China #RobotIncident #Festival

Is GenAI Digital Cocaine?: The article reflects on how reliance on generative AI tools, initially helpful for routine tasks like analytics integration, can lead to diminished problem-solving abilities and increased frustration when the tools fall short. The author compares this dependence to a form of addiction, questioning the long-term cognitive and creative consequences of overusing AI.

#GenAI #AIOveruse #CognitiveImpact #TechAddiction #AIReflection

Apple’s $10B+ Siri AI Disaster: The article provides an in-depth examination of Apple’s considerable challenges with its Siri AI development. After promising revolutionary advancements, Apple faced internal failures that led to a 33% response error rate in testing and delayed key features until 2026. The piece asks how Apple faltered even with substantial cash reserves and expert engineers, and what that implies about AI reliability for other companies.

#Apple #Siri #AI #TechFailure #Innovation

AI Blindspots: The site catalogues common pitfalls encountered when working with Large Language Models (LLMs) during AI coding, with an emphasis on the Sonnet family of models. It suggests rules and techniques to mitigate these issues, such as black-box testing, using a bulldozer method, and reading the documentation.

#AI #LLMs #Coding #AIDevelopment #TechTricks

Regards,

M@

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair